Often, when we talk to potential clients about conducting a Fundamental Rights Impact Assessment, compliance teams push back, arguing that the law is already aligned with ethical principles. That perception is not entirely misguided. However, there are gaps. We are going to use the 2019 Apple Card and Goldman Sachs scandal to illustrate one of them.

The scandal:

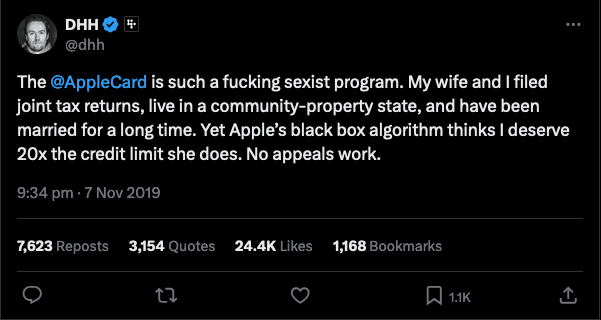

A prominent software engineer, David Heinemeier Hansson who developed the Ruby on Rails Framework, with 350,000 followers at the time of his post in November 2019, published on X (Twitter) the following accusation:

It didn't take long for Linda Lacewell, a New York regulator at the Department of Financial Services (DFS), to launch an investigation into whether the credit limit decisions violated the Equal Credit Opportunity Act, which prohibits discrimination based on sex, among other characteristics.

The result:

The investigation "did not produce evidence of unlawful discrimination" in the Apple Card credit limit decisions. In other words, the regulator did not find that the companies had acted illegally.

If it was not illegal, why were people angry?

This is a case where the law, company policies, and ethics are not aligned, resulting in customers publicly complaining about unfair treatment.

Goldman Sachs was the issuing bank for the Apple Card and underwrote its credit decisions, including credit limits. When the DFS reviewed the Bank's policies, it confirmed that the algorithm was not making decisions based on gender: the model did not include this information, because gender is a "prohibited characteristic" under fair lending law. However, some married women who shared finances with their husbands received much lower credit limits (20 times lower in the case that went viral), and this is the unfair treatment customers were angry about. In effect, a process that complies with the legal framework can still discriminate, because lower credit limits can prevent women from buying a house, starting a business, supporting their children, or paying for education, health insurance, or anything else they need credit for.

Why was the model unfair to women?

The DFS's investigative method falls short of the standards used by algorithm auditors like us at Thrace Labs. There are two standard approaches to demonstrating that a model is unfair: proxies and false predictions.

Proxies are data that can be used to infer people's prohibited characteristics (their gender, political affiliation, location, religion…). Microsoft and EY carried out their own analysis of the Apple Card scandal using a publicly available dataset of credit card clients in Taiwan from 2005. They created a new characteristic representing someone's interest rate, with the aim of including it as an additional factor in the model. However, they showed that interest rate serves as a proxy for whether a person is a man or a woman. This means that using interest rate to determine credit limits risks discriminating by gender, even if using it is legal.

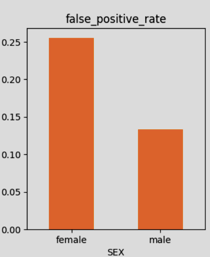
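A proxy check of this kind can be sketched in a few lines of Python. This is a minimal sketch under our own assumptions, not the method Microsoft and EY used: it scores how well a single feature separates two groups using the ROC AUC, i.e. the probability that a randomly chosen member of one group has a higher value than a randomly chosen member of the other. The function names `proxy_auc` and `proxy_strength` and the toy data are hypothetical.

```python
from typing import Sequence


def proxy_auc(feature: Sequence[float], group: Sequence[int]) -> float:
    """ROC AUC of using `feature` alone to tell group 1 apart from group 0.

    Computed as the probability that a randomly chosen member of group 1
    has a higher feature value than a randomly chosen member of group 0
    (ties count as half). 0.5 means the feature carries no signal.
    """
    pos = [f for f, g in zip(feature, group) if g == 1]
    neg = [f for f, g in zip(feature, group) if g == 0]
    if not pos or not neg:
        raise ValueError("both groups must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def proxy_strength(feature: Sequence[float], group: Sequence[int]) -> float:
    """Rescale the AUC to [0, 1]: 0 = no signal, 1 = perfect proxy.

    Direction does not matter: a feature that is systematically lower for
    one group is as revealing as one that is systematically higher.
    """
    return abs(proxy_auc(feature, group) - 0.5) * 2


if __name__ == "__main__":
    # Hypothetical toy data: interest rates and a binary gender label
    # (1 = woman, 0 = man). Values are invented for illustration only.
    interest_rate = [0.18, 0.21, 0.19, 0.22, 0.11, 0.12, 0.10, 0.13]
    is_woman = [1, 1, 1, 1, 0, 0, 0, 0]
    print(proxy_strength(interest_rate, is_woman))  # 1.0: a perfect proxy here
```

In a real audit, a check like this would be run over every candidate feature (and over combinations of features, for example by training a classifier to predict the protected attribute), and the threshold for flagging a feature as a proxy remains a judgement call.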

The false predictions approach looks at a model's error rates for a given group of people. In the same analysis, Microsoft and EY built a simple model to illustrate this approach. They used data on credit utilisation and missed payments (factors that also appear in the Bank's policy) to build a system that predicts whether a person will default on their credit card payment the following month. The results showed that women were more likely to receive false predictions: they were more often classified as going to default when they actually did not (false positives). This means the model risks discriminating based on gender, and companies need to use tools such as the open-source Fairlearn toolkit to assess and mitigate unfairness in their models.
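The core of this approach is computing the same error metric separately for each group. Fairlearn's `MetricFrame` can disaggregate metrics such as `false_positive_rate` by a sensitive feature; the minimal sketch below does the equivalent computation in plain Python so the arithmetic is visible. The function names `false_positive_rates` and `fpr_ratio`, and the labels and predictions, are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def false_positive_rates(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """False positive rate per group.

    Label 1 means "defaults next month". A false positive is a person who
    did not default (y_true == 0) but was predicted to (y_pred == 1).
    Groups with no actual non-defaulters are omitted (rate undefined).
    """
    negatives: Dict[Hashable, int] = defaultdict(int)
    false_pos: Dict[Hashable, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 0:
            negatives[g] += 1
            if p == 1:
                false_pos[g] += 1
    return {g: false_pos[g] / n for g, n in negatives.items()}


def fpr_ratio(rates: Dict[Hashable, float]) -> float:
    """Highest group FPR divided by the lowest; 1.0 means parity."""
    lo, hi = min(rates.values()), max(rates.values())
    if hi == 0:
        return 1.0  # no false positives in any group
    return float("inf") if lo == 0 else hi / lo


if __name__ == "__main__":
    # Hypothetical labels and predictions for ten applicants.
    y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
    y_pred = [1, 1, 0, 0, 1, 1, 0, 0, 0, 1]
    groups = ["F"] * 5 + ["M"] * 5
    rates = false_positive_rates(y_true, y_pred, groups)
    print(rates)             # {'F': 0.5, 'M': 0.25}
    print(fpr_ratio(rates))  # 2.0
```

Here non-defaulting women are wrongly flagged twice as often as non-defaulting men, which is the pattern the analysis above describes. Which ratio counts as unacceptable is a policy decision, not something the code can settle.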

A few days later, as the post kept gaining traction on social media (24.4k likes and thousands of replies), Apple co-founder Steve Wozniak replied to Heinemeier Hansson, saying he had had a similar experience.

Image credits: Microsoft and EY analysis on credit loan decisions.

Even if a system is not set up to discriminate, its results can, and these two approaches demonstrate it. Companies should apply the standard methods used by algorithm auditors when building and maintaining their services, to ensure they follow both the law and best practice.

Is it the Bank’s fault? A question of damage to brand reputation

DFS investigators found that people had a mistaken perception of how credit limits are set. They showed that the Bank's policy complies with the law, as it determines credit limits from credit score, indebtedness, income, credit utilisation, missed payments, and other elements of credit history.

The DFS investigators also analysed data about a specific couple who had complained on X (Twitter). They showed that the husband had multiple credit cards in his name and a history of repaying loans (cards, university, car, mortgage), while his wife had only one credit card and was not named on the mortgage. The investigators were able to demonstrate that this is why she received a credit limit 20 times lower: she had not built a credit history of her own, so the bank considered her high-risk. The Bank and the DFS argued that spouses are not necessarily granted the same limits, because it is each individual's credit history that counts.

People complained publicly because of the perception of unfair treatment, and because Goldman Sachs (which was also in charge of customer support) was unable to address the complaints in a timely manner.

Following the public complaints, Apple and Goldman Sachs launched a learning program called Path to Apple Card, which helps people understand how Goldman Sachs assesses their creditworthiness and shows them steps to build good credit.

With this approach, both companies addressed public concern about a "black-box" algorithm and the perception of unfairness. Within Responsible AI practice, this falls under the transparency activities companies can implement to build trust with their customers.

In today’s European legislative context:

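The AI Act was published in the Official Journal of the EU on July 12th 2024 and entered into force on August 1st 2024, with transition periods that give companies time to comply. If Apple Card were investigated on or after August 2nd 2026, when the obligations for the high-risk AI systems listed in Annex III start to apply, the companies would need to demonstrate that they meet the requirements for high-risk AI systems, because AI systems used to evaluate creditworthiness fall into this category.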

What does this mean?

At a high level, companies developing and using AI systems to determine creditworthiness will have to:

Develop a Risk Management System that identifies, mitigates, and monitors risks to health, safety, or fundamental rights. Alongside it, deployers of creditworthiness systems must conduct a fundamental rights impact assessment, which helps identify these risks. In our case, the fundamental rights at risk in creditworthiness decisions include: the freedom to choose an occupation and the right to engage in work (e.g., credit to pay for education); the freedom to conduct a business (e.g., credit to start a business); the right to property (e.g., a mortgage); the right to non-discrimination (e.g., the examples above); equality between women and men (e.g., the argument raised in the Apple Card case); and others that can be identified by undergoing our Fundamental Rights Impact Assessment.

Examine the data used to train, validate, and test creditworthiness models for possible biases, and take measures to detect, prevent, and mitigate them.

Draw up technical documentation showing that the company is taking the necessary steps to minimise risk.

Enable automatic logging of events over the lifetime of the system, so there is a track record that can be analysed and used as part of the technical documentation and risk management system.

Take transparency actions, such as explaining to customers how the credit system works (something Apple and Goldman Sachs already address with their Path to Apple Card program).

Define human oversight measures that allow people to monitor the model's outputs and, where necessary, override them.

Define accuracy, robustness, and cybersecurity measures to ensure the credit model performs in line with the risk management system.
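For the logging requirement, a minimal sketch of one way a team might record each credit decision as a structured JSON line, using only Python's standard library. The AI Act does not prescribe this format: the logger name, the `log_decision` function, and the record fields are hypothetical, and a production system would also need tamper-resistant storage, retention rules, and data minimisation for personal data.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Any, Dict

# Hypothetical logger name; route it to durable storage in practice.
logger = logging.getLogger("credit_decisions")


def log_decision(
    applicant_id: str, model_version: str, inputs: Dict[str, Any], credit_limit: float
) -> Dict[str, Any]:
    """Record one credit decision as a JSON line and return the record."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # UTC, ISO 8601
        "applicant_id": applicant_id,
        "model_version": model_version,  # ties the decision to a documented model
        "inputs": inputs,                # the factors the model actually used
        "credit_limit": credit_limit,
    }
    logger.info(json.dumps(record, sort_keys=True))
    return record


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    log_decision(
        "A-001",
        "limit-model-1.3",
        {"credit_utilisation": 0.42, "missed_payments": 0},
        1500.0,
    )
```

Recording the model version alongside the inputs is what makes such logs useful later: an auditor can re-run the false-prediction and proxy checks on the decisions a specific model version actually made.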

Moreover, the European Commission asked CEN and CENELEC (the European Committee for Standardization and the European Committee for Electrotechnical Standardization) to draft ten standards that guide companies in demonstrating conformity with the AI Act, and these standards are expected to be adopted as European harmonised standards. Thrace Labs has been actively involved in their development since January 2024.

Conclusion:

Legal teams can help companies comply with the law, but they need to work with people knowledgeable about AI ethics, fundamental rights, and technical standards to ensure the company develops robust policies. They also need to work with AI Ethics Champions across the organisation who can help implement such policies and monitor their implementation.